AI Reasoning: Balancing Generality and Reliability

In the ever-evolving landscape of artificial intelligence, the potential for transformative impact is monumental. According to Goldman Sachs, AI could add as much as $7 trillion to global GDP. However, as AI rapidly advances, a new challenge emerges: the delicate balance between generality and reliability in AI reasoning.

The generality of LLM systems is impressive. They are good at learning from data and applying what they have learned to new data. For example, you can use the same model to analyze healthcare data, e-commerce data, or insurance data. But current LLM systems still have significant limitations, especially when it comes to complex tasks. The main reason is that they lack deliberate, step-by-step reasoning, often called System 2 intelligence.

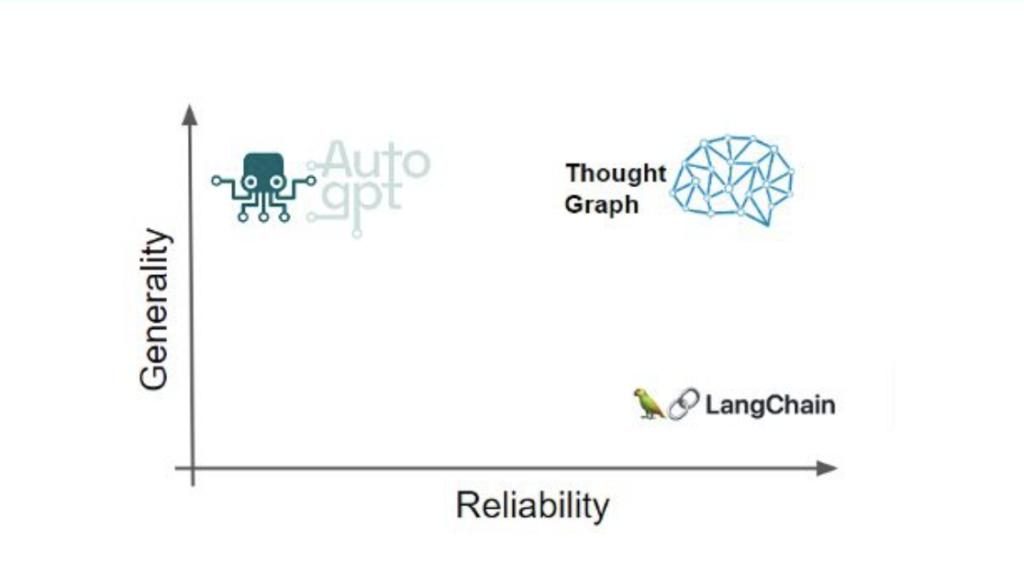

An ideal reasoning module can break complex tasks into simpler ones, retrieve relevant information when it does not have enough, or call non-AI tools to fulfill user queries if needed. Because LLMs lack such a module, we often embed them inside traditional software structures. LangChain is one example of this approach, along with a few other projects. This is a bit like hard-coding a coarse-grained reasoning system into standard software. But this approach limits the generality of AI systems.
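To make the "LLM inside traditional software" idea concrete, here is a minimal sketch in which ordinary code fixes the control flow (retrieve, build a prompt, call the model once). Everything in it is an assumption for illustration: `fake_llm`, `retrieve`, and `fixed_pipeline` are hypothetical names, the retrieval is a naive keyword match, and none of this is LangChain's API.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; a real system would call an LLM here."""
    return f"[model answer to: {prompt}]"


def retrieve(query: str, documents: dict[str, str]) -> list[str]:
    """Naive keyword retrieval: keep documents that share a word with the query."""
    words = set(query.lower().split())
    return [text for text in documents.values() if words & set(text.lower().split())]


def fixed_pipeline(
    question: str,
    documents: dict[str, str],
    llm: Callable[[str], str] = fake_llm,
) -> str:
    """The steps and their order are decided by the programmer, not by the model."""
    context = retrieve(question, documents)
    prompt = f"Context: {' | '.join(context)}\nQuestion: {question}"
    return llm(prompt)


if __name__ == "__main__":
    docs = {"a": "refund policy lasts 30 days", "b": "shipping takes a week"}
    print(fixed_pipeline("refund window", docs))
```

The pipeline is predictable because its steps never change, which is also why it does not generalize: a question that needs a different sequence of steps requires new code.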

On the other hand, there are agentic solutions. These approaches work probabilistically: they do not follow strict rules, and they are quite generic because they use the LLM itself for reasoning. Projects like WebGPT, AutoGPT, and ReAct fall into this category. However, they are not reliable enough for production applications, especially enterprise-grade ones.
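For contrast, an agentic loop can be sketched as follows. This is a simplified illustration under stated assumptions, not the implementation of WebGPT, AutoGPT, or ReAct: `scripted_model` is a hypothetical stand-in for an LLM, the `tool:input` / `final:answer` text protocol is invented for the example, and the two tools are toys.

```python
from typing import Callable

# Hypothetical tools the agent may call; names and behavior are illustrative only.
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
    "echo": lambda text: text,
}


def scripted_model(history: list[str]) -> str:
    """Stand-in for an LLM. Returns 'tool:input' or 'final:answer'."""
    if not any(line.startswith("observation:") for line in history):
        return "calculator:2+3"
    return "final:" + history[-1].removeprefix("observation:")


def run_agent(
    task: str,
    model: Callable[[list[str]], str] = scripted_model,
    max_steps: int = 5,
) -> str:
    """The model, not the programmer, decides the next step on every iteration."""
    history = [f"task:{task}"]
    for _ in range(max_steps):
        kind, _, payload = model(history).partition(":")
        if kind == "final":
            return payload
        tool = TOOLS.get(kind)
        if tool is None:
            # The model asked for a tool that does not exist; report it and continue.
            history.append(f"observation:unknown tool {kind}")
            continue
        history.append(f"observation:{tool(payload)}")
    raise RuntimeError("agent did not finish within max_steps")


if __name__ == "__main__":
    print(run_agent("add 2 and 3"))
```

Nothing in the loop guarantees that the model picks a sensible tool or ever emits a final answer, so the only safeguard here is the `max_steps` cap. That is one way to see why such systems are general but hard to make reliable.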

Sure, these two paradigms are influential, but they are not the only ways to explore AI reasoning. The truth is, the lack of a versatile and dependable reasoning engine is a major bottleneck that is holding back countless AI initiatives. It is keeping them stuck in the demo phase, and it is preventing them from delivering a seamless user experience in production systems.

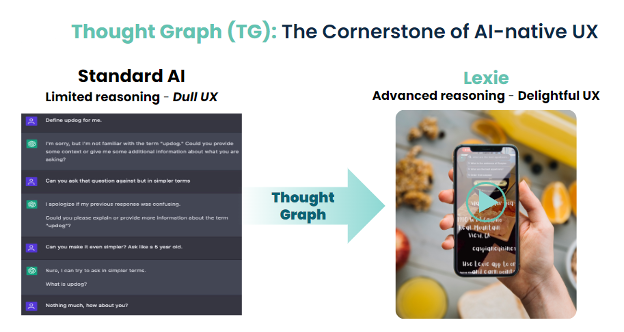

Introducing Lexie: Enhancing AI Reasoning with Thought Graphs

Meet Lexie, a new AI that uses a reasoning representation called the Thought Graph (“TG”). The TG and its framework neatly separate the complexities of AI reasoning into four distinct parts:

- Generating a reasoning space

- Searching the reasoning space for the best and most reliable option

- Executing the reasoning

- Refining the reasoning with human feedback
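The four parts above can be pictured with a toy sketch. It is not Lexie's Thought Graph: the post does not describe the TG data structure, so the flat list of candidate plans, the `reliability` score, and the function names `generate`, `search`, `execute`, and `refine` are all assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    steps: list[str]
    reliability: float  # assumed score in [0, 1]; higher means more trusted


def generate(task: str) -> list[Plan]:
    """Part 1: build a reasoning space (hard-coded here; a model might propose it)."""
    return [
        Plan(["answer_directly"], reliability=0.4),
        Plan(["retrieve_docs", "answer_with_context"], reliability=0.8),
    ]


def search(plans: list[Plan]) -> Plan:
    """Part 2: pick the candidate with the highest reliability score."""
    return max(plans, key=lambda p: p.reliability)


def execute(plan: Plan, task: str) -> list[str]:
    """Part 3: run each step; this sketch only records what would run."""
    return [f"{step}({task!r})" for step in plan.steps]


def refine(plan: Plan, approved: bool, rate: float = 0.1) -> Plan:
    """Part 4: nudge the score up or down based on human feedback."""
    delta = rate if approved else -rate
    return Plan(plan.steps, min(1.0, max(0.0, plan.reliability + delta)))


if __name__ == "__main__":
    best = search(generate("q"))
    print(execute(best, "q"))
    print(refine(best, approved=False))
```

Because each part is a separate function, any one of them could be swapped (for example, a stricter `search` that rejects low-scoring plans) without touching the others.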

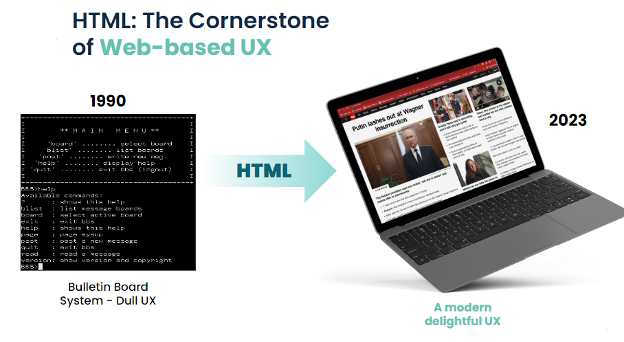

This separation gives us the flexibility to build a range of reasoning abilities (not just two extremes), striking a careful balance between reliability and generality. In our opinion, a reasoning representation like TG is as essential to AI applications as HTML is to web development.

An Open Representation for Reasoning

TG has been a huge win for us, and it’s now an essential part of our system. It gives us the flexibility to use a variety of reasoning methods, and we can implement essential safety mechanisms no matter which method we choose.

We have been in conversation with a number of industry experts who are working on some very innovative AI projects. Their collective insights have led us to a clear conclusion: open representation of reasoning is the way to go. That is why we are building a community of thought leaders to enrich our Thought Graph and make it open source in the future.

Upcoming Blog Series and Call to Action

We are excited to share a series of blog posts in the coming months that will dive into the insights we have gathered from over two and a half years of building and refining the Thought Graph and deploying it across diverse applications. Whether you are just starting out on your AI journey or you are a seasoned pro, we think you will find this series informative and thought-provoking. Stay tuned!

If you believe in our vision of creating an open representation for reasoning, we would love to connect with you. Please subscribe to our blog series and become a part of the vibrant discourse that is shaping the future of AI reasoning. Together, we can create a future where generality and reliability seamlessly coexist in AI reasoning.