Friends of Lexie Blog Series - July Edition

Thanks for your interest and support! We’re dedicated to making every app AI-native in a reliable way. Current AI models and LLMs are not reliable enough for enterprise applications, and their behavior is hard to analyze or explain. We’re working hard to change that.

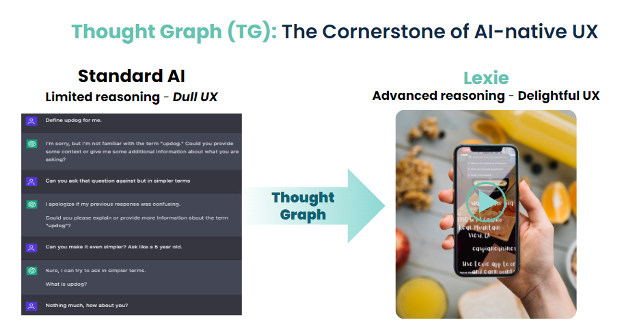

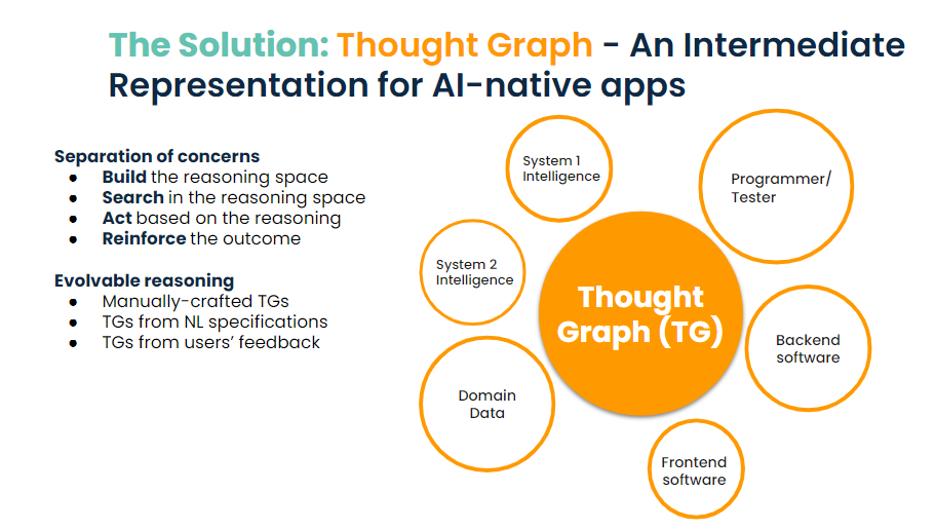

We’re taking a graph-based approach to grounding our AI model. This representation lets different parties (programmers, product designers, human auditors, software modules, and AI models) analyze, understand, and improve the reasoning. We believe this representation, which we call Thought Graph, will be the foundation of AI-native applications, just as HTML is the foundation of web applications.

As we expand our “Friends of Lexie” list, we invite you to receive updates about our venture. This blog includes an introduction to Lexie. If you have any questions or feedback, please contact us. We hope you enjoy this article.

Market Demand for AI-Native Apps

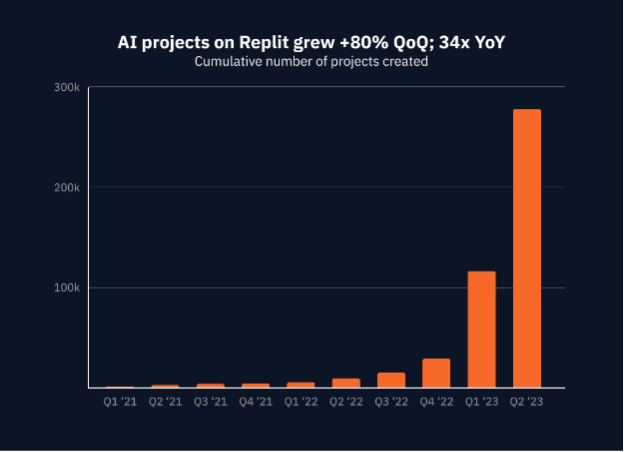

Replit’s data indicates that the number of AI projects on their platform has increased 34-fold since last year. A Databricks survey of 9,000 organizations also shows that almost every CEO is asking their business units to develop an AI strategy. Our observations from the market are similar. Our customers want to use AI in their customer support and content creation. They want to deploy AI-native solutions quickly and without allocating too many of their resources.

Lexie Updates

Product Highlights

We learned that our customers are interested in using our internal development tool, Thought Graph Low-code, to customize their applications. We have decided to add this tool to our offering as well. Please feel free to watch the demo below.

Introduction to Lexie

Lexie is a startup poised to transform the way we interact with applications. With our low-code technology, we are making every app an AI-native app. This technology can increase the productivity and efficiency of building apps by 20x.

Elevating User Experience with a Multi-Modal Reasoning Engine

Lexie was founded in 2021 with the vision of building a platform that would allow developers to transform every application into an AI-native application, where users can interact with the application using natural language. The AI agent (initially referred to as an “overlay bot”) performs the necessary reasoning and drives the application automatically, skipping unnecessary user interactions and creating novel UIs as needed. The company was an early entrant into the market. Therefore, we decided to build applications using our development platform rather than presenting the platform itself as the product. Lexie built multiple co-pilots/chatbot agents for its e-commerce customers.

The launch of ChatGPT resulted in a substantial increase in interest in our technology. Customers were impressed by the speed at which Lexie was able to develop software, even though building AI-native software is more challenging than developing traditional software.

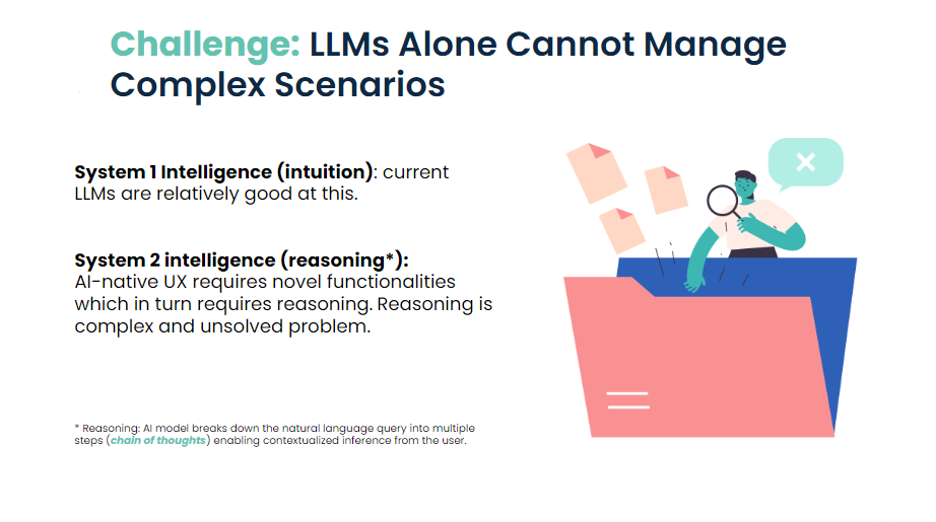

Why is Reasoning Important for AI-native Applications?

If you follow advances in AI research, you probably know that general-purpose reasoning is one of the most important problems that top researchers are working to solve. Examples include Tree of Thoughts and Thought Cloning, which use existing AI models to improve reasoning, and Self-Supervised Learning and Generative Flow Networks, which use entirely new models to improve reasoning.

When it comes to generating and driving the user experience, LLMs have similar shortcomings, and therefore we need a robust reasoning engine. However, there are three main considerations:

- Since we are solving the problem for a particular domain, we can leverage a fine-tuned version of existing AI models grounded using domain knowledge.

- Our main goal is to build a model for a software application to predict the right action(s) given a user command. Software apps have a good amount of documentation and source code that can help to build such a model.

- Collecting interaction data and user feedback is as important as, if not more important than, the AI model. Such data will be key to improving and evolving the reasoning model.

We recommend that our customers deploy a reasoning engine as part of their applications today, as it is highly reliable and enterprise-ready for basic UI interactions. This will allow them to collect user feedback and improve the reasoning for natural language interactions over time, which we refer to as evolvable reasoning.

Currently, every software application consists of three important components: front-end, back-end, and data layer. We believe that all future applications will have a reasoning engine as the fourth component.

Thought Graph - A Representation for Reasoning

Thought Graph is an intermediate representation of reasoning. We think Thought Graph will be the cornerstone of AI-native applications the same way HTML became the cornerstone of web applications. A Thought Graph server is a reasoning engine that enables applications to process natural language queries, even complex ones. It does this by breaking down the reasoning process into four steps:

- Generation of the reasoning space including retrieval of supplementary knowledge

- Search in the reasoning space to find the best reasoning alternative

- Execution of the chosen alternative(s) for reasoning

- Improvement of the reasoning based on reinforcement learning
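To make the four steps above concrete, here is a minimal toy sketch in Python. It is not Lexie's implementation or API: the `Alternative` class, the function names, the keyword-overlap scoring, and the simple reward update (standing in for reinforcement learning) are all assumptions chosen only to illustrate how generation, search, execution, and improvement could fit together.

```python
from dataclasses import dataclass


@dataclass
class Alternative:
    """One candidate action in the reasoning space (hypothetical structure)."""
    action: str
    keywords: frozenset
    weight: float = 0.0  # learned adjustment, updated from user feedback


def generate(command, catalog):
    """Step 1: build the reasoning space from actions sharing a word with the command."""
    words = set(command.lower().split())
    return [alt for alt in catalog if words & alt.keywords]


def search(command, space):
    """Step 2: choose the alternative with the best keyword overlap plus learned weight."""
    words = set(command.lower().split())
    return max(space, key=lambda alt: len(words & alt.keywords) + alt.weight, default=None)


def execute(alt):
    """Step 3: run the chosen action (here we only report it)."""
    return f"executed:{alt.action}"


def improve(alt, reward, rate=0.5):
    """Step 4: shift the weight by a scaled reward, a stand-in for reinforcement learning."""
    alt.weight += rate * reward


catalog = [
    Alternative("track_order", frozenset({"track", "order"})),
    Alternative("cancel_order", frozenset({"cancel", "order"})),
]

command = "stop my order"
first = search(command, generate(command, catalog))   # ambiguous: both match only "order"
improve(first, reward=-1.0)                            # the user says the choice was wrong
second = search(command, generate(command, catalog))  # the other alternative now scores higher
print(execute(first), execute(second))
```

In this sketch the same ambiguous command resolves differently after one piece of negative feedback, which is the kind of gradual improvement the fourth step describes.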

Thought Graph grounds its reasoning in business-specific knowledge and data. Customers can begin with level 1 reasoning by creating the Thought Graph for their application using our low-code tool. They can then evolve the reasoning to level 2 and level 3 reasoning (analogous to the levels of automation in self-driving cars) based on detailed business requirements, as well as their users’ feedback.

When the application logic is captured in the form of a Thought Graph, the different modalities of the application, including a web application, a chatbot, a voice agent, or a mix of them, are generated automatically.
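As a rough illustration of the idea that one description of the application logic can feed several modalities, the sketch below renders a single node as both a web form and a chatbot prompt. The node layout (`intent`, `slots`) and both render functions are hypothetical and are not taken from the Thought Graph format.

```python
# Hypothetical node: one step of application logic and the inputs it needs.
node = {
    "intent": "track_order",
    "slots": [{"name": "order_id", "label": "Order number"}],
}


def render_web(node):
    """Render the node as an HTML form for a web front-end."""
    fields = "".join(
        f'<label>{slot["label"]}<input name="{slot["name"]}"></label>'
        for slot in node["slots"]
    )
    return f'<form data-intent="{node["intent"]}">{fields}<button>Submit</button></form>'


def render_chat(node):
    """Render the same node as the questions a chatbot would ask."""
    return [f'What is your {slot["label"].lower()}?' for slot in node["slots"]]


print(render_web(node))
print(render_chat(node))
```

Both outputs come from the same node, so a change to the logic (for example, adding a slot) would appear in every modality without editing each front-end by hand.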

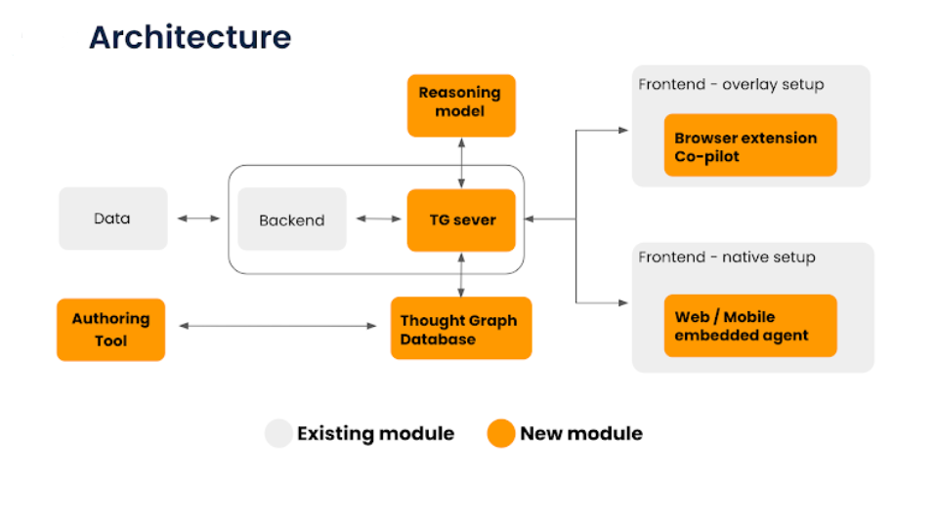

The standard architecture for deploying Thought Graph is shown below. Our Thought Graph server sits between the front-end and back-end of the application and uses Lexie’s proprietary AI model, as well as the Thought Graph database, to fulfill the application's requests.

Lexie vs Other AI Middlewares

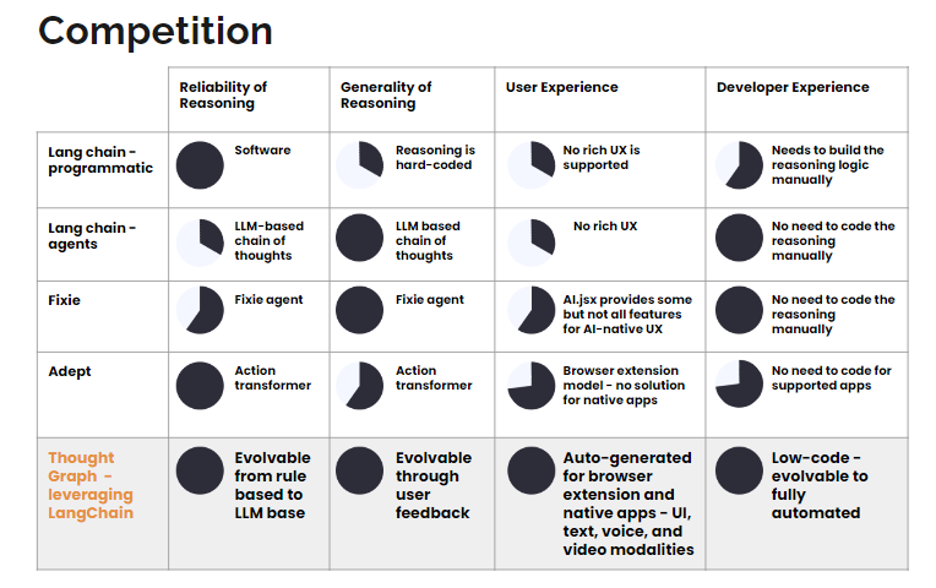

Lexie leverages open-source projects like LangChain and LlamaIndex. Developers can choose to use LangChain alone (without our Thought Graph server) to develop their applications. Other options include platforms like Fixie or Adept. Our Thought Graph differs from the competition in several ways.

- First, Lexie offers a superior user experience for different modalities – all auto-generated from the same Thought Graph.

- Second, Thought Graphs can leverage the generality of AI models for reasoning, making them more versatile than other platforms.

- Third, our low-code technology enables rapid development of AI-native software.

- Finally, the Thought Graph architecture is designed for enterprise applications, making it more reliable, analyzable, and scalable than other platforms.

The following figure depicts Lexie’s competitive advantage with respect to generality and reliability, as well as user experience and developer experience.

Upcoming Blog Series and Call to Action

We’re excited to share a series of blog posts in the coming months that will dive into the insights we’ve gathered from over two and a half years of refining the Thought Graph and deploying it across diverse applications. Whether you’re just starting out on your AI journey or you’re a seasoned pro, we think you’ll find this series informative and thought-provoking. Stay tuned!

If you share our vision of creating an open representation for reasoning, we’d love to connect with you. You can subscribe to our blog series through this link and join the discussion that’s shaping the future of AI reasoning. Together, we can create a future where generality and reliability coexist in AI reasoning.